Difference between revisions of "2019Q3 Reports: NAACL 2019"

ChristyDoran (talk | contribs) |

|||

| (30 intermediate revisions by 2 users not shown) | |||

| Line 201: | Line 201: | ||

* Video Poster Highlights | * Video Poster Highlights | ||

| − | : This year included one minute slides with pre recorded audio that showcase the posters to be presented that day. The goal was to provide more visibility to posters. These were shown during the welcome reception, breakfast and breaks. | + | : This year included one minute slides with pre recorded audio that showcase the posters to be presented that day. The goal was to provide more visibility to posters. These were shown during the welcome reception, breakfast and breaks. A/V failures the first day of the conference have made it hard to assess effectiveness. |

* Remote Presentations | * Remote Presentations | ||

| Line 210: | Line 210: | ||

** preferred pronouns (optionally) added to badges | ** preferred pronouns (optionally) added to badges | ||

** I’m hiring/I’m looking for a job/I’m new badge stickers | ** I’m hiring/I’m looking for a job/I’m new badge stickers | ||

| − | ** | + | ** Link to D&I report will be included when it is available. |

* Two-stage Submissions | * Two-stage Submissions | ||

| Line 218: | Line 218: | ||

* Full papers available for bidding: reviewers loved it, authors did not | * Full papers available for bidding: reviewers loved it, authors did not | ||

| + | |||

| + | * Student Research Papers | ||

| + | : talks and posters from the SRW were integrated into the main conference program. Positive feedback was received about this, better experience for students. | ||

= Submissions rates and distributions = | = Submissions rates and distributions = | ||

Authors were permitted to switch format (long/short) when they submitted the full papers, so the total in the chart below uses 2271 as the total number of submissions, discounting the 103 that never submitted a full paper in the second phase. Seventy nine papers were desk-rejected due to anonymity, formatting, or dual-submission violations; 456 papers withdrawn prior to acceptance decisions being sent, although some were withdrawn part way through the review process; and an additional 11 papers were withdrawn after acceptance notifications had been sent. Keeping the acceptance rate consistent with past years meant 5 parallel tracks were needed to fit more papers into 3 days--as the conference grows, decisions will have to be made about continuing to add more tracks, adding more days to the main conference, or lowering the acceptance rate. The overall technical program consisted of 423 main conference papers, plus 9 TACL papers, 23 SRW papers, 28 Industry papers, and 24 demos. The TACL and SRW papers were integrated into the program, and marked SRW or TACL accordingly. | Authors were permitted to switch format (long/short) when they submitted the full papers, so the total in the chart below uses 2271 as the total number of submissions, discounting the 103 that never submitted a full paper in the second phase. Seventy nine papers were desk-rejected due to anonymity, formatting, or dual-submission violations; 456 papers withdrawn prior to acceptance decisions being sent, although some were withdrawn part way through the review process; and an additional 11 papers were withdrawn after acceptance notifications had been sent. Keeping the acceptance rate consistent with past years meant 5 parallel tracks were needed to fit more papers into 3 days--as the conference grows, decisions will have to be made about continuing to add more tracks, adding more days to the main conference, or lowering the acceptance rate. 
The overall technical program consisted of 423 main conference papers, plus 9 TACL papers, 23 SRW papers, 28 Industry papers, and 24 demos. The TACL and SRW papers were integrated into the program, and marked SRW or TACL accordingly. | ||

| − | |||

| − | + | {| class="wikitable" | |

| − | + | ! | |

| − | + | ! style="text-align:left;"| Long | |

| − | + | ! style="text-align:left;"| Short | |

| − | + | ! style="text-align:left;"| Total | |

| − | + | ! style="text-align:left;"|TACL | |

| − | + | |- | |

| − | Accepted as talk | + | | Reviewed |

| − | Accepted as poster | + | | 1067 |

| − | Total Accepted | + | | 666 |

| − | + | | 1733 | |

| − | + | | | |

| − | + | |- | |

| + | | Accepted as talk | ||

| + | | 140 | ||

| + | | 72 | ||

| + | | 212 | ||

| + | | 4 | ||

| + | |- | ||

| + | | Accepted as poster | ||

| + | | 141 | ||

| + | | 70 | ||

| + | | 211 | ||

| + | | 5 | ||

| + | |- | ||

| + | | Total Accepted | ||

| + | | 281 (26.3%) | ||

| + | | 142 (21.3%) | ||

| + | | 423 (24.4%) | ||

| + | | 9 | ||

| + | |} | ||

== Detailed statistics by area == | == Detailed statistics by area == | ||

| − | + | {| class="wikitable" | |

| − | + | ! style="text-align:left;"| Area | |

| − | Area | + | ! style="text-align:left;"| Long (%) |

| − | Long (%) | + | ! style="text-align:left;"| Short (%) |

| − | Short (%) | + | |- |

| − | + | | Bio and clinical NLP | |

| − | + | | 7 (57) | |

| − | + | | 28 (17) | |

| − | Bio and clinical NLP | + | |- |

| − | 7 (57) | + | | Question Answering |

| − | 28 (17) | + | | 73 (36) |

| − | Question Answering | + | | 41 (17) |

| − | 73 (36) | + | |- |

| − | 41 (17) | + | | Cognitive modeling |

| − | Cognitive modeling | + | | 24 (29) |

| − | 24 (29) | + | | 14 (14) |

| − | 14 (14) | + | |- |

| − | Resources and Evaluation | + | | Resources and Evaluation |

| − | 33 (27) | + | | 33 (27) |

| − | 20 (20) | + | | 20 (20) |

| − | Dialog and Interactive systems | + | |- |

| − | 64 (20) | + | | Dialog and Interactive systems |

| − | 18 (27) | + | | 64 (20) |

| − | Semantics | + | | 18 (27) |

| − | 80 (13) | + | |- |

| − | 42 (11) | + | | Semantics |

| − | Discourse and Pragmatics | + | | 80 (13) |

| − | 38 (21) | + | | 42 (11) |

| − | + | |- | |

| − | Sentiment Analysis | + | | Discourse and Pragmatics |

| − | 32 (28) | + | | 38 (21) |

| − | 40 (20) | + | | 11 (36) |

| − | Ethics, Bias and Fairness | + | |- |

| − | 16 (25) | + | | Sentiment Analysis |

| − | 12 (50) | + | | 32 (28) |

| − | Social Media | + | | 40 (20) |

| − | 44 (18) | + | |- |

| − | 41 (36) | + | | Ethics, Bias and Fairness |

| − | Generation | + | | 16 (25) |

| − | 46 (14) | + | | 12 (50) |

| − | 19 (23) | + | |- |

| − | Speech | + | | Social Media |

| − | 19 (31) | + | | 44 (18) |

| − | 9 (33) | + | | 41 (36) |

| − | Information Extraction | + | |- |

| − | 46 (28) | + | | Generation |

| − | 16 (12) | + | | 46 (14) |

| − | Style | + | | 19 (23) |

| − | 24 ( (25) | + | |- |

| − | 16 (25) | + | | Speech |

| − | Information Retrieval | + | | 19 (31) |

| − | 22 (22) | + | | 9 (33) |

| − | 13 (30) | + | |- |

| − | Summarization | + | | Information Extraction |

| − | 22 (27) | + | | 46 (28) |

| − | 28 (28) | + | | 16 (12) |

| − | Machine Learning for NLP | + | |- |

| − | 100 (29) | + | | Style |

| − | 22 (22) | + | | 24 ( (25) |

| − | Syntax | + | | 16 (25) |

| − | 36 (52) | + | |- |

| − | 54 (13) | + | | Information Retrieval |

| − | Machine Translation | + | | 22 (22) |

| − | 49 (30) | + | | 13 (30) |

| − | 53 (18) | + | |- |

| − | Text Mining | + | | Summarization |

| − | 101 (18) | + | | 22 (27) |

| − | 29 (24) | + | | 28 (28) |

| − | Multilingual NLP | + | |- |

| − | 43 (25) | + | | Machine Learning for NLP |

| − | 28 (10) | + | | 100 (29) |

| − | Theory and Formalisms | + | | 22 (22) |

| − | 12 (58) | + | |- |

| − | 12 (16) | + | | Syntax |

| − | NLP Applications | + | | 36 (52) |

| − | 60 (30) | + | | 54 (13) |

| − | 41 (17) | + | |- |

| − | Vision & Robotics | + | | Machine Translation |

| − | 41 (12) | + | | 49 (30) |

| − | 22 (36) | + | | 53 (18) |

| − | Phonology | + | |- |

| − | 24 (33) | + | | Text Mining |

| − | + | | 101 (18) | |

| + | | 29 (24) | ||

| + | |- | ||

| + | | Multilingual NLP | ||

| + | | 43 (25) | ||

| + | | 28 (10) | ||

| + | |- | ||

| + | | Theory and Formalisms | ||

| + | | 12 (58) | ||

| + | | 12 (16) | ||

| + | |- | ||

| + | | NLP Applications | ||

| + | | 60 (30) | ||

| + | | 41 (17) | ||

| + | |- | ||

| + | | Vision & Robotics | ||

| + | | 41 (12) | ||

| + | | 22 (36) | ||

| + | |- | ||

| + | | Phonology | ||

| + | | 24 (33) | ||

| + | | 24 (25) | ||

| + | |} | ||

== Conference tracks == | == Conference tracks == | ||

| Line 334: | Line 376: | ||

== Recruiting ACs and Reviewers == | == Recruiting ACs and Reviewers == | ||

| − | Similar to what other PCs have done in the past, we distributed a wide call for volunteers to recruit the Area Chairs and Reviewers. All volunteers were scanned by PCs and assigned ACs/reviewer roles, and each area was seeded with a set of volunteer reviewers. Area Chairs then filled out the remainder of their respective committees. There were 25 specific areas + one for “Mixed Topics” and at least 2 ACs per topic area. After the abstract deadline, we added more ACs to teams with larger than predicted submissions . Our goal was to ensure greater diversity by including in each area some participants who may not have been previously involved, and therefore would not have been invited if the committees were built from lists of previous reviewers. 390 of 1321 reviewers were reviewing for NAACL for the first time. | + | Similar to what other PCs have done in the past, we distributed a wide call for volunteers to recruit the Area Chairs and Reviewers. All volunteers were scanned by PCs and assigned ACs/reviewer roles, and each area was seeded with a set of volunteer reviewers. Area Chairs then filled out the remainder of their respective committees. There were 25 specific areas + one for “Mixed Topics” and at least 2 ACs per topic area. After the abstract deadline, we added more ACs to teams with larger than predicted submissions . Our goal was to ensure greater diversity by including in each area some participants who may not have been previously involved, and therefore would not have been invited if the committees were built from lists of previous reviewers. 390 of 1321 reviewers were reviewing for NAACL for the first time. 40 of the 94 area chairs were first time area chairs for NAACL. |

=== Breakdown by gender for ACs and reviewers === | === Breakdown by gender for ACs and reviewers === | ||

| Line 357: | Line 399: | ||

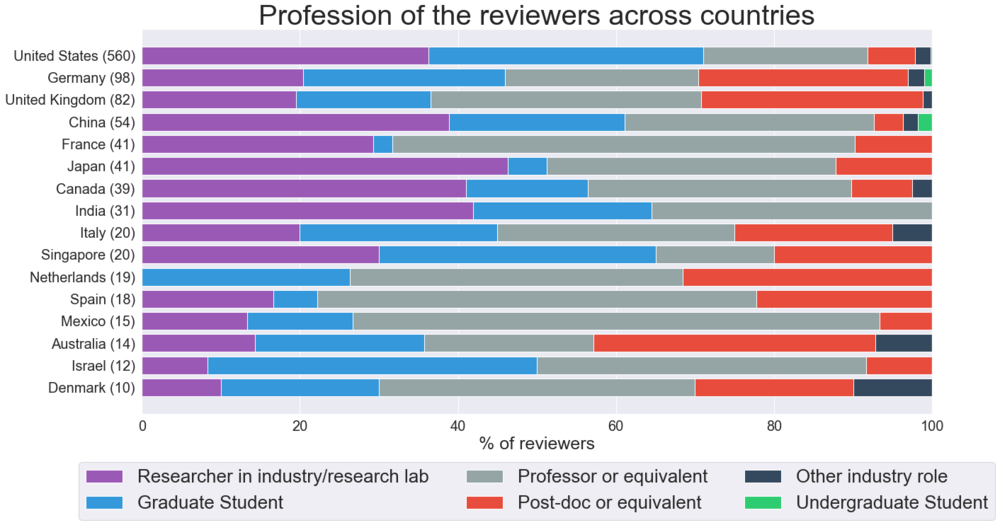

=== Breakdown by employment category and country for ACs and reviewers === | === Breakdown by employment category and country for ACs and reviewers === | ||

| − | [[File:Profession reviewers.png|1000px | + | [[File:Profession reviewers.png|1000px|]] |

| − | |||

== Abstract Submissions == | == Abstract Submissions == | ||

| Line 388: | Line 429: | ||

We used a hybrid reviewing form, combining elements of the EMNLP 2018, NAACL-HLT 2018 and ACL 2018, with a 6-point overall rating scale so there was no “easy out” mid-point, distinct sections of summary, strengths and weaknesses to make easy to scan and compare relevant sections, and the minimum length feature of START enabled to elicit more consistently substantive content for the authors. This received excellent feedback from authors but which some reviewers complained about and others outright circumvented via html tags or repeated filler content. | We used a hybrid reviewing form, combining elements of the EMNLP 2018, NAACL-HLT 2018 and ACL 2018, with a 6-point overall rating scale so there was no “easy out” mid-point, distinct sections of summary, strengths and weaknesses to make easy to scan and compare relevant sections, and the minimum length feature of START enabled to elicit more consistently substantive content for the authors. This received excellent feedback from authors but which some reviewers complained about and others outright circumvented via html tags or repeated filler content. | ||

| − | + | ||

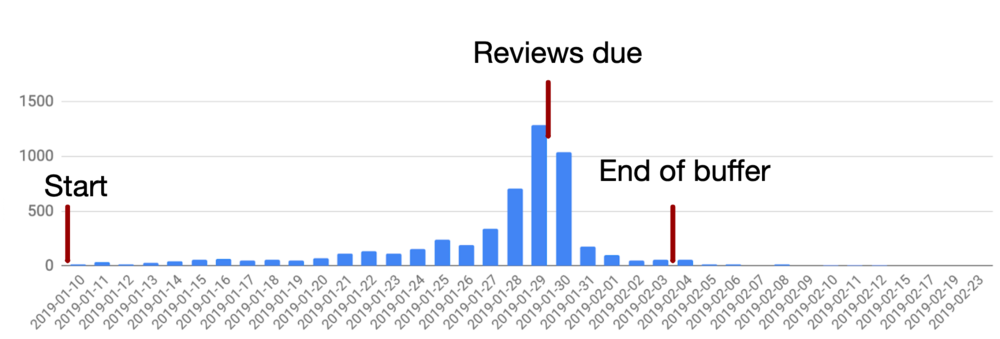

| + | The graph bellow shows the timeline of first review submissions. | ||

| + | [[File:review_submissions.png|1000px|]] | ||

| + | |||

| + | Regarding the increasing challenge in preserving double blind review, PCs found that the papers whose authors the reviewers could guess were more likely to receive an overall score of 5 or 6, compared to papers whose authors were not identified by the reviewers. | ||

| + | |||

No author response: due to time constraints and finding from NAACL 2018 that it had little impact. Authors were unhappy about this, they really want to be able to respond to reviews. | No author response: due to time constraints and finding from NAACL 2018 that it had little impact. Authors were unhappy about this, they really want to be able to respond to reviews. | ||

| − | |||

| − | |||

| − | |||

| − | + | Did not repeat Test of Time awards from 2018--should this be something that the NAACL/ACL board runs, and/or be done every few years. [There were ToT awards at ACL 2019 and it looks like this will be happening at ACLs.] | |

= Best paper awards = | = Best paper awards = | ||

| Line 407: | Line 450: | ||

* Best Long Paper | * Best Long Paper | ||

| − | : BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding <br /> | + | : ''BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding'' <br /> |

: Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova | : Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova | ||

* Best Short Paper | * Best Short Paper | ||

| − | : Probing the Need for Visual Context in Multimodal Machine Translation <br /> | + | : ''Probing the Need for Visual Context in Multimodal Machine Translation'' <br /> |

: Ozan Caglayan, Pranava Madhyastha, Lucia Specia and Loïc Barrault | : Ozan Caglayan, Pranava Madhyastha, Lucia Specia and Loïc Barrault | ||

* Best Resource Paper | * Best Resource Paper | ||

| − | : CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge <br /> | + | : ''CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge'' <br /> |

: Alon Talmor, Jonathan Herzig, Nicholas Lourie and Jonathan Berant | : Alon Talmor, Jonathan Herzig, Nicholas Lourie and Jonathan Berant | ||

| Line 426: | Line 469: | ||

= Timeline = | = Timeline = | ||

| − | + | * Dec. 10th, 2018: Paper submission deadline (both long and short) | |

| + | * Dec. 14-17: Area chairs check papers | ||

| + | * Dec 20-Jan 2, 2019: Paper bidding window | ||

| + | * Jan. 3-8: Area chairs review assignment | ||

| + | * Jan. 9: Review period starts | ||

| + | * Jan. 29: Reviews due (around 3 weeks for reviewing) | ||

| + | * Jan. 30-Feb 3: Area chairs chase late reviewers add emergency reviewers | ||

| + | * Feb 4th-7: Area chairs discussion period | ||

| + | * Feb 8th-12: Area chairs determine recommendations and enter meta reviews | ||

| + | * Feb 13-21: Final decisions made | ||

| + | * Feb 22: Decisions sent to authors | ||

| + | * March 11: Presentation format recommendations | ||

| + | * March 18: ACs send best reviewers list | ||

| + | * March 20-April 8: Best paper selection period | ||

= Issues and recommendations = | = Issues and recommendations = | ||

| − | + | ;Maintaining anonymity | |

| + | : Wording of ACL policies invites reinterpretation (e.g. "are asked not to publicize [the paper] further during the anonymity period – the submitted paper should be as anonymous as possible.") | ||

| + | : Open review from overlapping conferences requires Chairs to make ad hoc decisions about whether de-anonymization as part of the review process does or does not violate ACL policies | ||

| + | : Expectation for transparency at odds with confidential review process (community wants to discuss all aspects of review process in social media) | ||

| + | |||

| + | ;Higher volume of papers & participants is straining our infrastructure | ||

| + | : START tools struggle to support this volume of papers | ||

| + | : Reviewer overload/burnout | ||

| + | : Challenges in coordinating logistics with the venue (A/V, coffee, recruiting lunch, video release forms, random people jumping into banquet buses) in the absence of a Local Chair | ||

| + | |||

| + | ; Possible solutions | ||

| + | : Look into sharing reviews for rejected papers with next conferences | ||

| + | : Revisit using Open Review for *ACL | ||

| + | : Strict policy on double submissions (like EMNLP) | ||

| − | + | ; Other recommendations | |

| − | + | : Do not print handbooks for all participants, have a smaller number available by request. Post-conference survey indicated that a majority of participants used only the conference app. | |

| − | + | : Have a Local Arrangements Chair for NAACL | |

| − | + | : Revise ACL anonymity and submission policies to remove alternate interpretations and thereby spare PCs time-consuming negotiations with authors | |

| − | START tools | + | : Consider moving NAACL to spring so that *ACL timelines are less compressed and NAACL reviewing does not fall over end-of-year holidays |

| − | + | : More automation of format checks in START & better documentation of the ones that are already there (obscure and buried flags) to ease the desk reject process | |

| − | + | : Allow extension of START COI tools to allow authors to list reviewers who should not be assigned to their paper | |

| − | |||

| − | |||

Latest revision as of 15:44, 6 August 2019

Program Committee

Organising Committee

General Chair

Jill Burstein, Educational Testing Service, USA

Program Co-Chairs

Christy Doran, Interactions LLC, USA

Thamar Solorio, University of Houston, USA

Industry Track Co-chairs

Rohit Kumar

Anastassia Loukina, Educational Testing Service, USA

Michelle Morales, IBM, USA

Workshop Co-Chairs

Smaranda Muresan, Columbia University, USA

Swapna Somasundaran, Educational Testing Service, USA

Elena Volodina, University of Gothenburg, Sweden

Tutorial Co-Chairs

Anoop Sarkar, Simon Fraser University, Canada

Michael Strube, Heidelberg Institute for Theoretical Studies, Germany

System Demonstration Co-Chairs

Waleed Ammar, Allen Institute for AI, USA

Annie Louis, University of Edinburgh, Scotland

Nasrin Mostafazadeh, Elemental Cognition, USA

Publication Co-Chairs

Stephanie Lukin, U.S. Army Research Laboratory

Alla Roskovskaya, City University of New York, USA

Handbook Chair

Steve DeNeefe, SDL, USA

Student Research Workshop Co-Chairs & Faculty Advisors

Sudipta Kar, University of Houston, USA

Farah Nadeem, University of Washington, USA

Laura Wendlandt, University of Michigan, USA

Greg Durrett, University of Texas at Austin, USA

Na-Rae Han, University of Pittsburgh, USA

Diversity & Inclusion Co-Chairs

Jason Eisner, Johns Hopkins University, USA

Natalie Schluter, IT University, Copenhagen, Denmark

Publicity & Social Media Co-Chairs

Yuval Pinter, Georgia Institute of Technology, USA

Rachael Tatman, Kaggle, USA

Website & Conference App Chair

Nitin Madnani, Educational Testing Service, USA

Student Volunteer Coordinator

Lu Wang, Northeastern University, USA

Video Chair

Spencer Whitehead, Rensselaer Polytechnic Institute, USA

Remote Presentation Co-Chairs

Meg Mitchell, Google, USA

Abhinav Misra, Educational Testing Service, USA

Local Sponsorship Co-Chairs

Chris Callison-Burch, University of Pennsylvania, USA

Tonya Custis, Thomson Reuters, USA

Local Organization

Priscilla Rasmussen, ACL

Area Chairs

Biomedical NLP & Clinical Text Processing

Bridget McInnes, Virginia Commonwealth University, USA

Byron C. Wallace, Northeastern University, USA

Cognitive Modeling – Psycholinguistics

Serguei Pakhomov, University of Minnesota, USA

Emily Prud’hommeaux, Boston College, USA

Dialog and Interactive systems

Nobuhiro Kaji, Yahoo Japan Corporation, Japan

Zornitsa Kozareva, Google, USA

Sujith Ravi, Google, USA

Michael White, Ohio State University, USA

Discourse and Pragmatics

Ruihong Huang, Texas A&M University, USA

Vincent Ng, University of Texas at Dallas, USA

Ethics, Bias and Fairness

Saif Mohammad, National Research Council Canada, Canada

Mark Yatskar, University of Washington, USA

Generation

He He, Amazon Web Services, USA

Wei Xu, Ohio State University, USA

Yue Zhang, Westlake University, China

Information Extraction

Heng Ji, Rensselaer Polytechnic Institute, USA

David McClosky, Google, USA

Gerard de Melo, Rutgers University, USA

Timothy Miller, Boston Children’s Hospital, USA

Mo Yu, IBM Research, USA

Information Retrieval

Sumit Bhatia, IBM’s India Research Laboratory, India

Dina Demner-Fushman, US National Library of Medicine, USA

Machine Learning for NLP

Ryan Cotterell, Johns Hopkins University, USA

Daichi Mochihashi, The Institute of Statistical Mathematics, Japan

Marie-Francine Moens, KU Leuven, Belgium

Vikram Ramanarayanan, Educational Testing Service, USA

Anna Rumshisky, University of Massachusetts Lowell, USA

Natalie Schluter, IT University of Copenhagen, Denmark

Machine Translation

Rafael E. Banchs, HLT Institute for Infocomm Research A*Star, Singapore

Daniel Cer, Google Research, USA

Haitao Mi, Ant Financial US, USA

Preslav Nakov, Qatar Computing Research Institute, Qatar

Zhaopeng Tu, Tencent, China

Mixed Topics

Ion Androutsopoulos, Athens Univ. of Economics and Business, Greece

Steven Bethard, University of Arizona, USA

Multilingualism, Cross lingual resources

Željko Agić, IT University of Copenhagen, Denmark

Ekaterina Shutova, University of Amsterdam, Netherlands

Yulia Tsvetkov, Carnegie Mellon University, USA

Ivan Vulic, Cambridge University, UK

NLP Applications

T. J. Hazen, Microsoft, USA

Alessandro Moschitti, Amazon, USA

Shimei Pan, University of Maryland Baltimore County, USA

Wenpeng Yin, University of Pennsylvania, USA

Su-Youn Yoon, Educational Testing Service, USA

Phonology, Morphology and Word Segmentation

Ramy Eskander, Columbia University, USA

Grzegorz Kondrak, University of Alberta, Canada

Question Answering

Eduardo Blanco, University of North Texas, USA

Christos Christodoulopoulos, Amazon, USA

Asif Ekbal, Indian Institute of Technology Patna, India

Yansong Feng, Peking University, China

Tim Rocktäschel, Facebook, USA

Avi Sil, IBM Research, USA

Resources and Evaluation

Torsten Zesch, University of Duisburg-Essen, Germany

Tristan Miller, Technische Universität Darmstadt, Germany

Semantics

Ebrahim Bagheri, Ryerson University, Canada

Samuel Bowman, New York University, USA

Matt Gardner, Allen Institute for Artificial Intelligence, USA

Kevin Gimpel, Toyota Technological Institute at Chicago, USA

Daisuke Kawahara, Kyoto University, Japan

Carlos Ramisch, Aix Marseille University, France

Sentiment Analysis

Isabelle Augenstein, University of Copenhagen, Denmark

Wai Lam, The Chinese University of Hong Kong, Hong Kong

Soujanya Poria, Nanyang Technological University, Singapore

Ivan Vladimir Meza Ruiz, UNAM, Mexico

Social Media

Dan Goldwasser, Purdue University, USA

Michael J. Paul, University of Colorado Boulder, USA

Sara Rosenthal, IBM Research, USA

Paolo Rosso, Universitat Politècnica de València, Spain

Chenhao Tan, University of Colorado Boulder, USA

Xiaodan Zhu, Queen’s University, Canada

Speech

Keelan Evanini, Educational Testing Service, USA

Yang Liu, LAIX Inc, USA

Style

Beata Beigman Klebanov, Educational Testing Service, USA

Manuel Montes, Instituto Nacional de Astrofísica, Óptica y Electrónica, Mexico

Joel Tetreault, Grammarly, USA

Summarization

Mohit Bansal, University of North Carolina Chapel Hill, USA

Fei Liu, University of Central Florida, USA

Ani Nenkova, University of Pennsylvania, USA

Tagging, Chunking, Syntax and Parsing

Adam Lopez, University of Edinburgh, Scotland

Roi Reichart, Technion – Israel Institute of Technology, Israel

Agata Savary, University of Tours, France

Guillaume Wisniewski, Université Paris Sud, France

Text Mining

Kai-Wei Chang, University of California Los Angeles, USA

Anna Feldman, Montclair State University, USA

Shervin Malmasi, Harvard Medical School, USA

Verónica Pérez-Rosas, University of Michigan, USA

Kevin Small, Amazon, USA

Diyi Yang, Carnegie Mellon University, USA

Theory and Formalisms

Valia Kordoni, Humboldt University Berlin, Germany

Andreas Maletti, University of Stuttgart, Germany

Vision, Robotics and other grounding

Francis Ferraro, University of Maryland Baltimore County, USA

Vicente Ordóñez, University of Virginia, USA

William Yang Wang, University of California Santa Barbara, USA

Main Innovations

- Conference theme

- The CFP made a special request for papers addressing the tension between data privacy and model bias in NLP, including: using NLP for surveillance and profiling, balancing the need for broadly representative data sets with protections for individuals, understanding and addressing model bias, and where bias correction becomes censorship. The three invited speakers were all selected to tie into the theme, and a Best Thematic Paper was selected.

- Land Acknowledgement

- Similar to what has been done in recent *CL conferences, the opening session included a land acknowledgement to recognize and honor Indigenous Peoples.

- Video Poster Highlights

- This year included one-minute slides with pre-recorded audio that showcased the posters to be presented that day. The goal was to give posters more visibility. These were shown during the welcome reception, breakfast and breaks. A/V failures on the first day of the conference made it hard to assess their effectiveness.

- Remote Presentations

- Remote presentations were supported for both talks and posters, via an application form to the committee.

- The new Diversity & Inclusion team piloted a number of new initiatives including:

- additional questions on the registration form to identify any accommodations

- preferred pronouns (optionally) added to badges

- I’m hiring/I’m looking for a job/I’m new badge stickers

- Link to D&I report will be included when it is available.

- Two-stage Submissions

- This year we followed a two-stage submission process, in which abstracts were due one week before full papers. Our goal was to get a head start on assigning papers to areas, and recruiting additional area chairs where submissions exceeded our predicted volume.

- Pro: early response to areas with larger than predicted number of papers

- Con: too much overhead for PCs, as authors repeatedly contacted chairs to request that papers be moved between long and short, or asked about changes to authorship, titles and abstracts.

- Full papers available for bidding: reviewers loved it, authors did not

- Student Research Papers

- Talks and posters from the SRW were integrated into the main conference program. This drew positive feedback and gave students a better experience.

Submissions rates and distributions

Authors were permitted to switch format (long/short) when they submitted the full papers, so the chart below uses 2271 as the total number of submissions, discounting the 103 that never submitted a full paper in the second phase. Seventy-nine papers were desk-rejected due to anonymity, formatting, or dual-submission violations; 456 papers were withdrawn before acceptance decisions were sent, some of them part way through the review process; and an additional 11 papers were withdrawn after acceptance notifications had been sent. Keeping the acceptance rate consistent with past years meant 5 parallel tracks were needed to fit more papers into 3 days. As the conference grows, decisions will have to be made about continuing to add more tracks, adding more days to the main conference, or lowering the acceptance rate. The overall technical program consisted of 423 main conference papers, plus 9 TACL papers, 23 SRW papers, 28 Industry papers, and 24 demos. The TACL and SRW papers were integrated into the program, and marked SRW or TACL accordingly.

| | Long | Short | Total | TACL |

|---|---|---|---|---|

| Reviewed | 1067 | 666 | 1733 | |

| Accepted as talk | 140 | 72 | 212 | 4 |

| Accepted as poster | 141 | 70 | 211 | 5 |

| Total Accepted | 281 (26.3%) | 142 (21.3%) | 423 (24.4%) | 9 |
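The percentages in the last row can be reproduced by dividing accepted papers by reviewed papers. The following is a minimal sketch that uses only the counts from the table above; the dictionary and function names are illustrative and not part of the original report.

```python
# Counts copied from the table above; all names here are illustrative.
reviewed = {"long": 1067, "short": 666}
accepted_talk = {"long": 140, "short": 72}
accepted_poster = {"long": 141, "short": 70}


def acceptance_rate(accepted: int, total: int) -> float:
    """Return the acceptance rate as a percentage, rounded to one decimal."""
    return round(100 * accepted / total, 1)


# Total accepted per format = talks + posters.
accepted = {k: accepted_talk[k] + accepted_poster[k] for k in reviewed}
rates = {k: acceptance_rate(accepted[k], reviewed[k]) for k in reviewed}
overall = acceptance_rate(sum(accepted.values()), sum(reviewed.values()))

print(accepted)  # {'long': 281, 'short': 142}
print(rates)     # {'long': 26.3, 'short': 21.3}
print(overall)   # 24.4
```

The TACL column is left out because no reviewed count is reported for it.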

Detailed statistics by area

| Area | Long (%) | Short (%) |

|---|---|---|

| Bio and clinical NLP | 7 (57) | 28 (17) |

| Question Answering | 73 (36) | 41 (17) |

| Cognitive modeling | 24 (29) | 14 (14) |

| Resources and Evaluation | 33 (27) | 20 (20) |

| Dialog and Interactive systems | 64 (20) | 18 (27) |

| Semantics | 80 (13) | 42 (11) |

| Discourse and Pragmatics | 38 (21) | 11 (36) |

| Sentiment Analysis | 32 (28) | 40 (20) |

| Ethics, Bias and Fairness | 16 (25) | 12 (50) |

| Social Media | 44 (18) | 41 (36) |

| Generation | 46 (14) | 19 (23) |

| Speech | 19 (31) | 9 (33) |

| Information Extraction | 46 (28) | 16 (12) |

| Style | 24 (25) | 16 (25) |

| Information Retrieval | 22 (22) | 13 (30) |

| Summarization | 22 (27) | 28 (28) |

| Machine Learning for NLP | 100 (29) | 22 (22) |

| Syntax | 36 (52) | 54 (13) |

| Machine Translation | 49 (30) | 53 (18) |

| Text Mining | 101 (18) | 29 (24) |

| Multilingual NLP | 43 (25) | 28 (10) |

| Theory and Formalisms | 12 (58) | 12 (16) |

| NLP Applications | 60 (30) | 41 (17) |

| Vision & Robotics | 41 (12) | 22 (36) |

| Phonology | 24 (33) | 24 (25) |

Conference tracks

The Industry Track, in its second year, had 28 accepted papers (10 oral and 18 posters, acceptance rate: ~28%), and ran a lunchtime Careers in Industry panel which was very well attended. Panelists were Judith Klavans, Yunyao Li, Owen Rambow, and Joel Tetreault and the moderator was Phil Resnik.

The Student Research Workshop had 23 accepted papers, distributed throughout the conference, and 19 submissions received pre-submission mentoring. For the first time, both archival and non-archival submissions were offered, meaning that authors who opted for the non-archival version will not have a paper available in the archive and are free to publish elsewhere.

There were 25 accepted Demos, which were spread across several of the poster sessions.

Reviewing

Recruiting ACs and Reviewers

Similar to what other PCs have done in the past, we distributed a wide call for volunteers to recruit the Area Chairs and Reviewers. All volunteers were scanned by PCs and assigned AC or reviewer roles, and each area was seeded with a set of volunteer reviewers. Area Chairs then filled out the remainder of their respective committees. There were 25 specific areas plus one for “Mixed Topics”, with at least 2 ACs per topic area. After the abstract deadline, we added more ACs to teams with larger-than-predicted submission counts. Our goal was to ensure greater diversity by including in each area some participants who may not have been previously involved, and who therefore would not have been invited if the committees were built from lists of previous reviewers. 390 of 1321 reviewers were reviewing for NAACL for the first time. 40 of the 94 area chairs were first-time area chairs for NAACL.
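To put the first-time participation counts in proportion, the shares can be computed directly from the figures in the paragraph above. This is a small illustrative calculation; the variable names are assumptions made for the example.

```python
# Figures taken from the paragraph above; names are illustrative.
first_time_reviewers, total_reviewers = 390, 1321
first_time_acs, total_acs = 40, 94

reviewer_share = round(100 * first_time_reviewers / total_reviewers, 1)
ac_share = round(100 * first_time_acs / total_acs, 1)

print(f"First-time reviewers: {reviewer_share}%")  # 29.5%
print(f"First-time area chairs: {ac_share}%")      # 42.6%
```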

Breakdown by gender for ACs and reviewers

| Response | Area Chair | Reviewer |

|---|---|---|

| Female | 24.4 | 25.2 |

| Male | 73 | 71.7 |

| Prefer not to answer | 2.6 | 3.1 |

Breakdown by employment category and country for ACs and reviewers

Abstract Submissions

This year we followed a two-stage submission process, in which abstracts were due one week before full papers. Our goal was to get a head start on assigning papers to areas, and recruiting additional area chairs where submissions exceeded our predicted volume. Relative to the projected numbers from NAACL-HLT 2018, several areas received a higher-than-predicted number of submissions: Biomedical/Clinical, Dialogue and Vision. Text Mining ended up with the overall largest number of submissions.

Handling desk rejects

Our process for identifying desk rejects has been very similar to what other PCs have done in the past. First, the area chairs check their batch of assigned papers and report any issues to us. As the reviewing begins, reviewers may also identify issues that were not caught by ACs, which they flag up to ACs or directly to PCs. We then review each of these issues and make a final decision, to ensure that papers are handled consistently. This means each paper is reviewed for non-content issues by at least three people. The major categories for desk rejects are:

- Violations of the dual submission policy specified in the call for papers

- Violations of the anonymity policy specified in the call for papers

- “Format cheating”: submissions not following the clearly stated format and style guidelines in either LaTeX or Word (thanks to Emily and Leon for introducing the concept).

As of February 7th, out of 2378 submissions, there were 44 rejections for format issues, 24 for anonymity violations, and 11 for dual submissions. This means that a total of 79 submissions, or about 3.3%, were desk-rejected.
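The desk-reject rate follows directly from the three counts reported above. A minimal sketch of the arithmetic, using only the figures from this section (the variable names are illustrative):

```python
# Desk-reject counts as reported in this section (as of February 7th).
desk_rejects = {"format": 44, "anonymity": 24, "dual_submission": 11}
total_submissions = 2378

total_rejected = sum(desk_rejects.values())
rate = total_rejected / total_submissions

print(f"desk rejects: {total_rejected}")
print(f"desk-reject rate: {rate:.1%}")

# Share of each category among the desk rejects.
for reason, count in desk_rejects.items():
    print(f"  {reason}: {count} ({count / total_rejected:.0%} of desk rejects)")
```

Format violations account for more than half of the desk rejects, which is consistent with the recommendation below to automate more of the format checks.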

Review process

Assignment to areas used the initial START assignments, followed by load rebalancing and conflict resolution using keywords and manual inspection of the papers. Authors were anonymous to Area Chairs.

Review assignment

- Criteria: Fairness, Expertise, Interest

- Method: area chair expertise + Toronto Paper Matching System (TPMS) + reviewer bids + manual tweaking

- Many reviewers did not have TPMS profiles

The goal was no more than 5 papers per reviewer, although some reviewers agreed to handle more. First-round accept/reject suggestions were made by area chairs. Final decisions were made by the program chairs.
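To make the combination of matching scores, bids, and a per-reviewer cap concrete, here is a purely illustrative sketch. It is not the procedure that START or TPMS implements, and it leaves out area chair expertise and the manual tweaking described above. The function name, the equal weighting of affinity and bids, the `per_paper` parameter, and the toy data are all assumptions made for the example; only the cap of 5 papers per reviewer comes from the text.

```python
from collections import defaultdict


def assign_reviewers(papers, reviewers, affinity, bids,
                     per_paper=3, max_load=5, bid_weight=0.5):
    """Greedy assignment: best-scoring (reviewer, paper) pairs first.

    affinity[(reviewer, paper)] -> float in [0, 1], a stand-in for a
        TPMS-style matching score (hypothetical input format).
    bids[(reviewer, paper)] -> float in [0, 1], a stand-in for a bid.
    Missing entries count as 0, e.g. for reviewers without a profile.
    """
    scored = []
    for r in reviewers:
        for p in papers:
            score = ((1 - bid_weight) * affinity.get((r, p), 0.0)
                     + bid_weight * bids.get((r, p), 0.0))
            scored.append((score, r, p))
    scored.sort(key=lambda t: t[0], reverse=True)

    load = defaultdict(int)       # papers assigned to each reviewer
    assigned = defaultdict(list)  # reviewers assigned to each paper
    for score, r, p in scored:
        if load[r] < max_load and len(assigned[p]) < per_paper:
            assigned[p].append(r)
            load[r] += 1
    return dict(assigned), dict(load)


# Toy example: 3 papers, 2 reviewers, 1 reviewer per paper, cap of 2.
papers = ["P1", "P2", "P3"]
reviewers = ["A", "B"]
affinity = {("A", "P1"): 0.9, ("B", "P1"): 0.2,
            ("A", "P2"): 0.8, ("B", "P2"): 0.7,
            ("A", "P3"): 0.6}
bids = {("B", "P3"): 1.0}

assigned, load = assign_reviewers(papers, reviewers, affinity, bids,
                                  per_paper=1, max_load=2)
print(assigned)
print(load)
```

A greedy pass like this is simple but not optimal: an early high-scoring match can use up a reviewer's capacity that a later paper needed more. That is one reason a manual adjustment step remains useful.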

We used a hybrid reviewing form, combining elements of the EMNLP 2018, NAACL-HLT 2018 and ACL 2018 forms. It had a 6-point overall rating scale so there was no “easy out” mid-point; distinct sections for summary, strengths and weaknesses, to make it easy to scan and compare relevant sections; and the minimum length feature of START enabled, to elicit more consistently substantive content for the authors. The minimum length requirement received excellent feedback from authors, but some reviewers complained about it and others outright circumvented it via HTML tags or repeated filler content.

The graph below shows the timeline of first review submissions.

Regarding the increasing challenge in preserving double blind review, PCs found that the papers whose authors the reviewers could guess were more likely to receive an overall score of 5 or 6, compared to papers whose authors were not identified by the reviewers.

There was no author response period, due to time constraints and the finding from NAACL 2018 that it had little impact. Authors were unhappy about this; they really want to be able to respond to reviews.

We did not repeat the Test of Time awards from 2018. Should this be something that the NAACL/ACL board runs, and/or be done every few years? [There were ToT awards at ACL 2019 and it looks like this will be happening at ACLs.]

Best paper awards

- Best Thematic Paper

  - What’s in a Name? Reducing Bias in Bios Without Access to Protected Attributes

  - Alexey Romanov, Maria De-Arteaga, Hanna Wallach, Jennifer Chayes, Christian Borgs, Alexandra Chouldechova, Sahin Geyik, Krishnaram Kenthapadi, Anna Rumshisky and Adam Kalai

- Best Explainable NLP Paper

  - CNM: An Interpretable Complex-valued Network for Matching

  - Qiuchi Li, Benyou Wang and Massimo Melucci

- Best Long Paper

  - BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

  - Jacob Devlin, Ming-Wei Chang, Kenton Lee and Kristina Toutanova

- Best Short Paper

  - Probing the Need for Visual Context in Multimodal Machine Translation

  - Ozan Caglayan, Pranava Madhyastha, Lucia Specia and Loïc Barrault

- Best Resource Paper

  - CommonsenseQA: A Question Answering Challenge Targeting Commonsense Knowledge

  - Alon Talmor, Jonathan Herzig, Nicholas Lourie and Jonathan Berant

Presentations

- Long-paper presentations: 22 sessions in total (4 sessions in parallel) + 2 dedicated Industry Track sessions, duration: 15 minutes for talk + 3 minutes for questions

- Short-paper presentations: 12 sessions in total (4 sessions in parallel), duration: 12 minutes for talk + 3 minutes for questions

- Best-paper presentation: 1 session at the end of the last day

- Posters: 8 sessions in total (1 session in parallel with every non-plenary talk session) + 1 dedicated Industry Poster session

Timeline

- Dec. 10th, 2018: Paper submission deadline (both long and short)

- Dec. 14-17: Area chairs check papers

- Dec 20-Jan 2, 2019: Paper bidding window

- Jan. 3-8: Area chairs review assignment

- Jan. 9: Review period starts

- Jan. 29: Reviews due (around 3 weeks for reviewing)

- Jan. 30-Feb 3: Area chairs chase late reviewers and add emergency reviewers

- Feb 4th-7: Area chairs discussion period

- Feb 8th-12: Area chairs determine recommendations and enter meta reviews

- Feb 13-21: Final decisions made

- Feb 22: Decisions sent to authors

- March 11: Presentation format recommendations

- March 18: ACs send best reviewers list

- March 20-April 8: Best paper selection period

Issues and recommendations

- Maintaining anonymity

  - Wording of ACL policies invites reinterpretation (e.g. "are asked not to publicize [the paper] further during the anonymity period – the submitted paper should be as anonymous as possible.")

  - Open review from overlapping conferences requires Chairs to make ad hoc decisions about whether de-anonymization as part of the review process does or does not violate ACL policies

  - Expectation for transparency is at odds with the confidential review process (the community wants to discuss all aspects of the review process on social media)

- Higher volume of papers & participants is straining our infrastructure

  - START tools struggle to support this volume of papers

  - Reviewer overload/burnout

- Challenges in coordinating logistics with the venue (A/V, coffee, recruiting lunch, video release forms, random people jumping into banquet buses) in the absence of a Local Chair

- Possible solutions

  - Look into sharing reviews for rejected papers with subsequent conferences

  - Revisit using Open Review for *ACL

  - Strict policy on double submissions (like EMNLP)

- Other recommendations

  - Do not print handbooks for all participants; have a smaller number available by request. The post-conference survey indicated that a majority of participants used only the conference app.

  - Have a Local Arrangements Chair for NAACL

  - Revise ACL anonymity and submission policies to remove alternate interpretations and thereby spare PCs time-consuming negotiations with authors

  - Consider moving NAACL to spring so that *ACL timelines are less compressed and NAACL reviewing does not fall over end-of-year holidays

  - More automation of format checks in START and better documentation of the ones that are already there (obscure and buried flags) to ease the desk reject process

  - Extend START COI tools to allow authors to list reviewers who should not be assigned to their paper