Difference between revisions of "2022Q1 Reports: ACL Rolling Review"

AmandaStent (talk | contribs) (Created page with "# ARR: Looking Back How did ARR come to exist? In the fall of 2019 and spring of 2020, the ACL Exec convened a committee to discuss the future of peer review for *ACL venues....") |

AmandaStent (talk | contribs) |

||

| (15 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | + | == ARR: Looking Back == | |

How did ARR come to exist? In the fall of 2019 and spring of 2020, the ACL Exec convened a committee to discuss the future of peer review for *ACL venues. This committee consisted primarily of recent program chairs of big *ACL conferences, and was tasked with considering how to handle “the rapid growth of submissions”. The core problems were stated as following: | How did ARR come to exist? In the fall of 2019 and spring of 2020, the ACL Exec convened a committee to discuss the future of peer review for *ACL venues. This committee consisted primarily of recent program chairs of big *ACL conferences, and was tasked with considering how to handle “the rapid growth of submissions”. The core problems were stated as following: | ||

| − | + | * There are many submissions to major NLP conferences each year; the rate of increase at the time was exponential | |

| − | + | * Low acceptance rates at major NLP conferences mean that we suspected that many submissions get resubmitted over and over | |

In addition, arXiv increasingly interferes with the peer review process, with some authors complaining of bias in favor of arXiv preprints and authors complaining of the slowness of peer review and risks due to low acceptance rates at major NLP conferences. | In addition, arXiv increasingly interferes with the peer review process, with some authors complaining of bias in favor of arXiv preprints and authors complaining of the slowness of peer review and risks due to low acceptance rates at major NLP conferences. | ||

| Line 12: | Line 12: | ||

To the core goal of ARR, cutting review load and increasing review consistency through R&R: | To the core goal of ARR, cutting review load and increasing review consistency through R&R: | ||

| − | | | 2020 | + | {| class="wikitable" |

| − | |- | + | ! style="text-align:left;"| Venue |

| − | | ACL | 3429 | 3350 | | | + | ! 2020 |

| − | | NAACL | - | 1797 | | | + | ! 2021 |

| − | | ARR | - | 3939 (through November) | 2093 (incl December 2021) | | + | ! 2022 |

| − | | ARR resubmissions | - | 221 (through November | 632 (December 2021/January 2022) | | + | |- |

| + | | ACL | ||

| + | | 3429 | ||

| + | | 3350 | ||

| + | | - | ||

| + | |- | ||

| + | | NAACL | ||

| + | | - | ||

| + | | 1797 | ||

| + | | - | ||

| + | |- | ||

| + | | ARR | ||

| + | | - | ||

| + | | 3939 (through November) | ||

| + | | 2093 (incl December 2021) | ||

| + | |- | ||

| + | | ARR resubmissions | ||

| + | | - | ||

| + | | 221 (through November) | ||

| + | | 632 (December 2021/January 2022) | ||

| + | |} | ||

853 submissions that would, without ARR, almost certainly have gone to new reviewers who would have had to write new reviews not knowing about the previous submissions/reviews, instead went to (in the majority of cases the same, but sometimes new) reviewers who had access to the previous reviews. It is important to stress that these numbers portray an incomplete picture of potential ARR gains, as ARR did not yet go through one complete year (that is, one full “conference season”). Once we reach the fall report, we will have a clearer picture regarding the overall reduction of the reviewing effort as we will know how many papers rejected from ACL/NAACL 2022 were re-committed to ACL-IJCNLP, EMNLP 2022 or another *ACL 2022 venue (*ACL workshops) without incurring new reviews. | 853 submissions that would, without ARR, almost certainly have gone to new reviewers who would have had to write new reviews not knowing about the previous submissions/reviews, instead went to (in the majority of cases the same, but sometimes new) reviewers who had access to the previous reviews. It is important to stress that these numbers portray an incomplete picture of potential ARR gains, as ARR did not yet go through one complete year (that is, one full “conference season”). Once we reach the fall report, we will have a clearer picture regarding the overall reduction of the reviewing effort as we will know how many papers rejected from ACL/NAACL 2022 were re-committed to ACL-IJCNLP, EMNLP 2022 or another *ACL 2022 venue (*ACL workshops) without incurring new reviews. | ||

| Line 24: | Line 44: | ||

To the point about arXiv: | To the point about arXiv: | ||

| − | + | * ARR’s more frequent submission cycles allow authors to get feedback more quickly | |

| − | + | * Almost 900 ARR submissions to-date opted in to be hosted as anonymous preprints | |

| − | + | == ARR Today: Stats == | |

| − | + | === Submissions and resubmissions === | |

Since the first deadline in May 2021, ARR has run 10 cycles and received a total of more than 6000 submissions including more than 800 resubmissions. Full statistics can be found on our [website](http://stats.aclrollingreview.org/). | Since the first deadline in May 2021, ARR has run 10 cycles and received a total of more than 6000 submissions including more than 800 resubmissions. Full statistics can be found on our [website](http://stats.aclrollingreview.org/). | ||

| − | + | === Authors and reviewers === | |

A total of 13801 unique OpenReview profiles have been associated with ARR to date, across authors, reviewers, action editors, tech team, and program chairs. | A total of 13801 unique OpenReview profiles have been associated with ARR to date, across authors, reviewers, action editors, tech team, and program chairs. | ||

| − | + | ==== Authors, author experience and authors as reviewers ==== | |

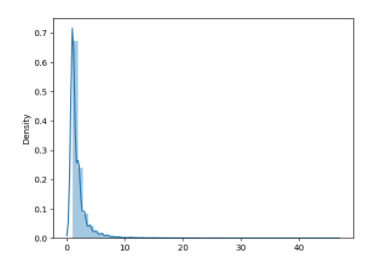

| − | 11787 unique author ids are associated with ARR submissions. The distribution of number of submissions across authors is shown in the chart below (5th percentile: 1; median: 1; mean: 1.95; | + | 11787 unique author ids are associated with ARR submissions. The distribution of number of submissions across authors is shown in the chart below (5th percentile: 1; median: 1; mean: 1.95; 95th percentile: 5; max: 46). |

| − | [ | + | [[File:Figure1.PNG|400px]] |

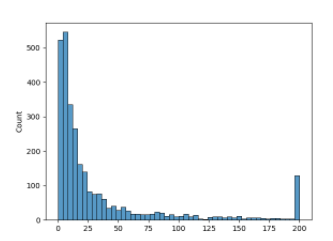

| − | We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for authors who have provided Semantic Scholar IDs (5th percentile: 1; median: 12; mean: 33; | + | We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for authors who have provided Semantic Scholar IDs (5th percentile: 1; median: 12; mean: 33; 95th percentile: 179). As expected, authors skew “junior”, with many having 0-5 publications - and of course, authors with no publications do not yet have Semantic Scholar profiles. |

| − | [ | + | [[File:Arrq122figure2.PNG|400px]] |

| − | + | ==== Reviewers, reviewer load and reviewer experience ==== | |

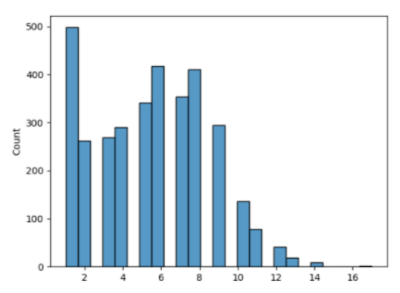

| − | 4391 unique reviewer ids are associated with ARR cycles to date. The distribution of number of reviews completed per reviewer who completed at least one review (including reviews of resubmissions) is shown in the chart below (5th percentile: 1; median: 6; mean: 5.47; | + | 4391 unique reviewer ids are associated with ARR cycles to date. The distribution of number of reviews completed per reviewer who completed at least one review (including reviews of resubmissions) is shown in the chart below (5th percentile: 1; median: 6; mean: 5.47; 95th percentile: 10; max: 17). This means that the cumulative load per reviewer across 10 months is approximately 6 papers on average. |

| − | [ | + | [[File:Arrq122figure3.PNG|400px]] |

| − | We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for reviewers who have provided Semantic Scholar IDs (5th percentile: 3; median: 20; mean: 40.26; | + | We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for reviewers who have provided Semantic Scholar IDs (5th percentile: 3; median: 20; mean: 40.26; 95th percentile: 170). Reviewers skew less junior than authors. When the ARR EiCs invite someone to review, either the person must have 5 publications with at least one in the past 5 years, or the person must be nominated by a senior action editor, action editor or EiC. However, the statistics here also include emergency reviewers, who may be more junior. |

| − | [ | + | [[File:Arrq122figure4.PNG|400px]] |

| − | + | ==== Action editors, action editor load and action editor experience ==== | |

| + | 531 unique action editor ids are associated with ARR cycles to date. The distribution of number of metareviews completed per action editor who completed at least one metareview (including metareviews of resubmissions) is shown in the chart below (5th percentile: 1; median: 10; mean: 8.67; 95th percentile: 14; max: 20). | ||

| − | + | [[File:Arrq122figure5.PNG|400px]] | |

| − | + | We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for action editors who have provided Semantic Scholar IDs (5th percentile: 5; median: 54; mean: 71.39; 95th percentile: 200). Action editors skew less junior than reviewers. Our initial pool of action editors was drawn from area chairs and senior area chairs from recent *ACL conferences plus workshop organizers in recent years, balancing as possible for diversity by geography and affiliation type (industry/academia). | |

| − | + | [[File:Arrq122figure6.PNG|400px]] | |

| − | + | == ARR Today: Innovations == | |

| − | |||

| − | |||

| − | |||

With ARR (a single system and process for reviewing) we have been able to satisfy several of the short-term proposals for improving reviewing and roll out other initiatives, including: | With ARR (a single system and process for reviewing) we have been able to satisfy several of the short-term proposals for improving reviewing and roll out other initiatives, including: | ||

| − | + | * Anonymous preprints - hosting anonymous preprints for NLP papers through OpenReview. Almost 900 papers opted in to anonymous preprints through February 2022. | |

| − | + | * Revise and resubmit - authors may revise and resubmit their papers, which we try to match to the previous reviewers (if available) unless the authors request new reviewers. Over 850 ARR submissions through January are resubmissions. See our stats dashboard for month-by-month breakdowns of resubmissions and requests for reviewer reassignment. | |

| − | + | * Reviews as data (with Iryna Gurevych, Ilia Kuznetsov and Nils Dycke) - authors and reviewers may opt-in to provide reviews for research (see https://openreview.net/forum?id=28n-0nBPTch). | |

| − | + | * Reviewer mentoring (with Isabelle Augenstein and Anna Rogers) - providing mentoring to junior reviewers and training to all reviewers (see https://aclrollingreview.org/reviewertutorial). | |

| − | + | * Responsible NLP research (with the NAACL program and reproducibility chairs) - providing a responsible NLP research checklist for authors, and training about ethics and reproducibility in NLP research (see https://aclrollingreview.org/responsibleNLPresearch/). Since December, all ARR submissions have completed the checklist. We understand the NAACL 2022 reproducibility chairs are working on an analysis. | |

| − | + | * Ethics review (with the ACL ethics committee) - providing guidelines for ethics reviewing (https://aclrollingreview.org/ethicsreviewertutorial). | |

| − | + | * Review statistics - providing statistics on the *ACL review process over time (http://stats.aclrollingreview.org/). | |

| − | + | * Reviewer recognition (coming to the February cycle!) - providing recognition letters to reviewers about their service (https://aclrollingreview.org/recognition/). | |

| − | + | == ARR: Looking ahead == | |

| − | In 2022, ARR will be used by EMNLP and AACL-IJCNLP, INLG and SIGDIAL, and numerous *ACL workshops. | + | In 2022, ARR will be used by EMNLP and AACL-IJCNLP, INLG and SIGDIAL, and numerous *ACL workshops (https://aclrollingreview.org/dates). |

Future ARR initiatives will build on the current initiatives listed above. For example, we will soon roll out: | Future ARR initiatives will build on the current initiatives listed above. For example, we will soon roll out: | ||

| − | + | * Review quality assessment - we are starting an initiative where authors and AEs rate review quality. This data will feed into reviewer mentoring and reviewer recognition (identifying reviewers whose reviews are outstanding). | |

| − | + | * Submissions-over-time statistics - what happens to NLP papers? For example, how often is a typical paper resubmitted before it is committed to a venue and accepted at a venue? | |

| − | + | * Improved reviewer assignment - Graham Neubig continues to explore ways to improve reviewer assignment. | |

ARR has invited permanent Senior Action Editors, each responsible for a particular area of NLP. ARR is also inviting permanent ethics reviewers. | ARR has invited permanent Senior Action Editors, each responsible for a particular area of NLP. ARR is also inviting permanent ethics reviewers. | ||

| Line 94: | Line 112: | ||

The ACL exec will add more EiCs to ARR so that we can effectively distribute the load of managing each cycle as well as building out ethics reviewing, mentoring, and other ARR initiatives. The tech team will recruit a co-CTO. | The ACL exec will add more EiCs to ARR so that we can effectively distribute the load of managing each cycle as well as building out ethics reviewing, mentoring, and other ARR initiatives. The tech team will recruit a co-CTO. | ||

| − | + | Finally, by agreement from the ACL exec, ARR will move to a six week cycle effective April 15 2022. This will give 9 cycles per year, allow all involved to finish one cycle before the next deadline, eliminate the exceptionally troublesome "December 15th" cycle, and give reviewers more breathing room. | |

| + | |||

| + | == Concerns and Challenges == | ||

ARR is a paper-centric reviewing service, independent of any conference. It is not our job to provide acceptance/rejection decisions. Many authors feel unsure of this new process and understandably so. AEs and reviewers are also new to this rolling process and are concerned about their ongoing loads. Conference chairs are unsure of the new process and opt for hybrid mode instead of supporting ARR fully, again understandably so. However, overall this means we are constantly addressing concerns from multiple interests, some in conflict with each other (for example, authors who want immediate, in-depth reviews and reviewers who want low loads and lots of time to review). | ARR is a paper-centric reviewing service, independent of any conference. It is not our job to provide acceptance/rejection decisions. Many authors feel unsure of this new process and understandably so. AEs and reviewers are also new to this rolling process and are concerned about their ongoing loads. Conference chairs are unsure of the new process and opt for hybrid mode instead of supporting ARR fully, again understandably so. However, overall this means we are constantly addressing concerns from multiple interests, some in conflict with each other (for example, authors who want immediate, in-depth reviews and reviewers who want low loads and lots of time to review). | ||

| Line 100: | Line 120: | ||

Moving to a new submission and review infrastructure (OpenReview) has proved challenging. The OpenReview team are very collegial and helpful, but the structure of ARR is unusual for OpenReview. It lacked many of the features and functionalities we needed to implement ARR, including some of the basic automations that are needed such as reminder emails, automatic checking of resubmissions, etc. Consequently, our tech team has worked very hard to implement the various initiatives outlined above while we were rolling out ARR, including but not limited to AE assignment, reviewer assignment, etc. and to provide consistent conflict of interest detection and reviewer assignment. | Moving to a new submission and review infrastructure (OpenReview) has proved challenging. The OpenReview team are very collegial and helpful, but the structure of ARR is unusual for OpenReview. It lacked many of the features and functionalities we needed to implement ARR, including some of the basic automations that are needed such as reminder emails, automatic checking of resubmissions, etc. Consequently, our tech team has worked very hard to implement the various initiatives outlined above while we were rolling out ARR, including but not limited to AE assignment, reviewer assignment, etc. and to provide consistent conflict of interest detection and reviewer assignment. | ||

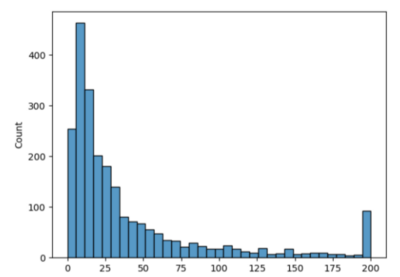

| − | In order for peer review to work, everyone must participate. Because the *ACL community skews young (see stats above), we must train junior reviewers and continue to involve more senior people in the reviewing process. To the extent that people think they can submit many papers, insist on high quality reviews, and yet provide no reviewing service themselves, any peer review system will fail. It is critical for experienced researchers to continue to participate in peer review. | + | In order for peer review to work, everyone must participate. Because the *ACL community skews young (see stats above), we must train junior reviewers and continue to involve more senior people in the reviewing process. To the extent that people think they can submit many papers, insist on high quality reviews, and yet provide no reviewing service themselves, any peer review system will fail. It is critical for experienced researchers to continue to participate in peer review. The chart below shows distribution of number of submissions by authors who are qualified to review for ARR (have at least 5 papers in relevant fields with the newest no more than 5 years old) and yet have provided 0 reviews to-date (x axis: number of people with y axis: number of submissions). |

| + | |||

| + | [[File:Arrq122figure7.PNG|250px]] | ||

Latest revision as of 14:58, 3 March 2022

ARR: Looking Back

How did ARR come to exist? In the fall of 2019 and spring of 2020, the ACL Exec convened a committee to discuss the future of peer review for *ACL venues. This committee consisted primarily of recent program chairs of big *ACL conferences, and was tasked with considering how to handle “the rapid growth of submissions”. The core problems were stated as follows:

- There are many submissions to major NLP conferences each year; the rate of increase at the time was exponential

- Low acceptance rates at major NLP conferences led us to suspect that many submissions are resubmitted over and over

In addition, arXiv increasingly interferes with the peer review process, with some authors complaining of bias in favor of arXiv preprints and others complaining of the slowness of peer review and the risks posed by low acceptance rates at major NLP conferences.

The committee came up with two sets of proposals: https://www.aclweb.org/portal/content/short-term-reform-proposals-acl-reviewing (June 2020) and https://www.aclweb.org/portal/content/long-term-reform-proposal-acl-reviewing (June 2020).

To the core goal of ARR, cutting review load and increasing review consistency through R&R:

| Venue | 2020 | 2021 | 2022 |
|---|---|---|---|
| ACL | 3429 | 3350 | - |
| NAACL | - | 1797 | - |
| ARR | - | 3939 (through November) | 2093 (incl December 2021) |
| ARR resubmissions | - | 221 (through November) | 632 (December 2021/January 2022) |

The 853 resubmissions (221 + 632 in the table above) would, without ARR, almost certainly have gone to new reviewers, who would have had to write new reviews without knowing about the previous submissions or reviews. Instead, they went to reviewers (in the majority of cases the same ones, but sometimes new) who had access to the previous reviews. It is important to stress that these numbers portray an incomplete picture of potential ARR gains, as ARR has not yet gone through one complete year (that is, one full “conference season”). Once we reach the fall report, we will have a clearer picture of the overall reduction in reviewing effort, as we will know how many papers rejected from ACL/NAACL 2022 were re-committed to AACL-IJCNLP, EMNLP 2022 or another *ACL 2022 venue (*ACL workshops) without incurring new reviews.

We do note that another of the short-term proposals, Findings, has been widely taken up, also contributing to reduced reviewing load, as Findings papers are no longer eligible for submission to ARR or *ACL venues.

To the point about arXiv:

- ARR’s more frequent submission cycles allow authors to get feedback more quickly

- Almost 900 ARR submissions to date have opted in to be hosted as anonymous preprints

ARR Today: Stats

Submissions and resubmissions

Since the first deadline in May 2021, ARR has run 10 cycles and received a total of more than 6000 submissions including more than 800 resubmissions. Full statistics can be found on our [website](http://stats.aclrollingreview.org/).

Authors and reviewers

A total of 13801 unique OpenReview profiles have been associated with ARR to date, across authors, reviewers, action editors, tech team, and program chairs.

Authors, author experience and authors as reviewers

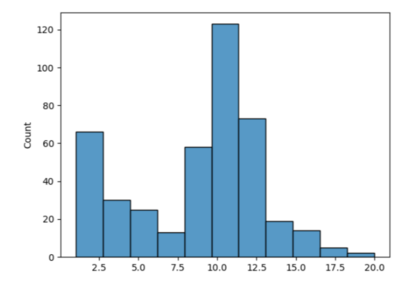

11787 unique author IDs are associated with ARR submissions. The distribution of the number of submissions per author is shown in the chart below (5th percentile: 1; median: 1; mean: 1.95; 95th percentile: 5; max: 46).

We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for authors who have provided Semantic Scholar IDs (5th percentile: 1; median: 12; mean: 33; 95th percentile: 179). As expected, authors skew “junior”, with many having 0-5 publications - and of course, authors with no publications do not yet have Semantic Scholar profiles.

Reviewers, reviewer load and reviewer experience

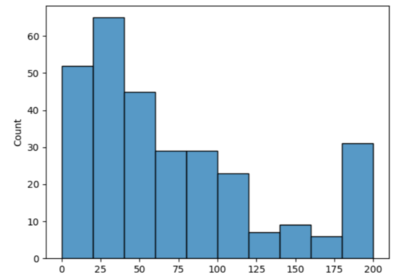

4391 unique reviewer IDs are associated with ARR cycles to date. The distribution of the number of reviews completed per reviewer who completed at least one review (including reviews of resubmissions) is shown in the chart below (5th percentile: 1; median: 6; mean: 5.47; 95th percentile: 10; max: 17). This means that the cumulative load per reviewer across 10 months is approximately 5 to 6 papers on average.
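As an illustration of how summary figures of this kind (5th percentile, median, mean, 95th percentile, max) can be derived from per-person counts, here is a minimal Python sketch. The report does not say which percentile method was used, so the nearest-rank method below is an assumption, and `example_counts` is made-up data, not the ARR numbers.

```python
import math
from statistics import mean, median


def nearest_rank_percentile(values, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    ordered = sorted(values)
    # Nearest rank: the smallest 1-based rank covering at least p% of the data.
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(counts):
    """Summarize a list of per-person counts in the same shape as the report."""
    return {
        "p5": nearest_rank_percentile(counts, 5),
        "median": median(counts),
        "mean": round(mean(counts), 2),
        "p95": nearest_rank_percentile(counts, 95),
        "max": max(counts),
    }


# Hypothetical reviews-per-reviewer counts (NOT the real ARR data).
example_counts = [1, 2, 3, 4, 5, 6, 6, 6, 7, 8, 9, 10]
print(summarize(example_counts))
```

Other percentile definitions (for example, linear interpolation) can give slightly different values on small samples, so the method should be stated alongside any published figures.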

We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for reviewers who have provided Semantic Scholar IDs (5th percentile: 3; median: 20; mean: 40.26; 95th percentile: 170). Reviewers skew less junior than authors. When the ARR EiCs invite someone to review, either the person must have 5 publications with at least one in the past 5 years, or the person must be nominated by a senior action editor, action editor or EiC. However, the statistics here also include emergency reviewers, who may be more junior.
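The invitation rule described above can be sketched as a small predicate. This is only an illustration under stated assumptions: the `Candidate` type, the function name `may_be_invited`, and the reading of "5 publications" as "at least 5" and "past 5 years" as "published no more than 5 calendar years ago" are all hypothetical and are not taken from the ARR tooling.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Candidate:
    # Hypothetical record: publication years plus a nomination flag.
    publication_years: List[int] = field(default_factory=list)
    nominated: bool = False  # nominated by a senior action editor, action editor or EiC


def may_be_invited(candidate, current_year, min_pubs=5, recency_years=5):
    """Return True if the candidate meets the publication rule or was nominated."""
    has_enough = len(candidate.publication_years) >= min_pubs
    # Assumption: "in the past 5 years" means published at most 5 years ago.
    has_recent = any(
        current_year - year <= recency_years
        for year in candidate.publication_years
    )
    return candidate.nominated or (has_enough and has_recent)


# Example: five publications, the newest from 2021, checked in 2022.
print(may_be_invited(Candidate([2015, 2016, 2017, 2018, 2021]), 2022))
```

Emergency reviewers, mentioned above, fall outside this rule and are not modeled here.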

Action editors, action editor load and action editor experience

531 unique action editor IDs are associated with ARR cycles to date. The distribution of the number of metareviews completed per action editor who completed at least one metareview (including metareviews of resubmissions) is shown in the chart below (5th percentile: 1; median: 10; mean: 8.67; 95th percentile: 14; max: 20).

We use Semantic Scholar profiles to estimate author and reviewer “seniority”. The chart below shows the number of previous publications from Semantic Scholar for action editors who have provided Semantic Scholar IDs (5th percentile: 5; median: 54; mean: 71.39; 95th percentile: 200). Action editors skew less junior than reviewers. Our initial pool of action editors was drawn from area chairs and senior area chairs from recent *ACL conferences plus workshop organizers in recent years, balancing as far as possible for diversity by geography and affiliation type (industry/academia).

ARR Today: Innovations

With ARR (a single system and process for reviewing) we have been able to satisfy several of the short-term proposals for improving reviewing and roll out other initiatives, including:

- Anonymous preprints - hosting anonymous preprints for NLP papers through OpenReview. Almost 900 papers opted in to anonymous preprints through February 2022.

- Revise and resubmit - authors may revise and resubmit their papers, which we try to match to the previous reviewers (if available) unless the authors request new reviewers. Over 850 ARR submissions through January are resubmissions. See our stats dashboard for month-by-month breakdowns of resubmissions and requests for reviewer reassignment.

- Reviews as data (with Iryna Gurevych, Ilia Kuznetsov and Nils Dycke) - authors and reviewers may opt in to provide reviews for research (see https://openreview.net/forum?id=28n-0nBPTch).

- Reviewer mentoring (with Isabelle Augenstein and Anna Rogers) - providing mentoring to junior reviewers and training to all reviewers (see https://aclrollingreview.org/reviewertutorial).

- Responsible NLP research (with the NAACL program and reproducibility chairs) - providing a responsible NLP research checklist for authors, and training about ethics and reproducibility in NLP research (see https://aclrollingreview.org/responsibleNLPresearch/). Since December, all ARR submissions have completed the checklist. We understand the NAACL 2022 reproducibility chairs are working on an analysis.

- Ethics review (with the ACL ethics committee) - providing guidelines for ethics reviewing (https://aclrollingreview.org/ethicsreviewertutorial).

- Review statistics - providing statistics on the *ACL review process over time (http://stats.aclrollingreview.org/).

- Reviewer recognition (coming to the February cycle!) - providing recognition letters to reviewers about their service (https://aclrollingreview.org/recognition/).

ARR: Looking ahead

In 2022, ARR will be used by EMNLP and AACL-IJCNLP, INLG and SIGDIAL, and numerous *ACL workshops (https://aclrollingreview.org/dates).

Future ARR initiatives will build on the current initiatives listed above. For example, we will soon roll out:

- Review quality assessment - we are starting an initiative where authors and AEs rate review quality. This data will feed into reviewer mentoring and reviewer recognition (identifying reviewers whose reviews are outstanding).

- Submissions-over-time statistics - what happens to NLP papers? For example, how often is a typical paper resubmitted before it is committed to a venue and accepted at a venue?

- Improved reviewer assignment - Graham Neubig continues to explore ways to improve reviewer assignment.

ARR has invited permanent Senior Action Editors, each responsible for a particular area of NLP. ARR is also inviting permanent ethics reviewers.

The ACL exec will add more EiCs to ARR so that we can effectively distribute the load of managing each cycle as well as building out ethics reviewing, mentoring, and other ARR initiatives. The tech team will recruit a co-CTO.

Finally, by agreement from the ACL exec, ARR will move to a six-week cycle effective April 15, 2022. This will give 9 cycles per year, allow all involved to finish one cycle before the next deadline, eliminate the exceptionally troublesome "December 15th" cycle, and give reviewers more breathing room.
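The "9 cycles per year" figure follows from simple date arithmetic: starting on April 15, 2022 and stepping in six-week increments, nine deadlines fall before the same date a year later. The Python sketch below checks this. It assumes strictly regular 42-day spacing, which is a simplification, so the dates it prints are illustrative and should not be read as the published ARR deadlines.

```python
from datetime import date, timedelta


def deadlines_within_one_year(first, weeks=6):
    """List deadlines spaced `weeks` apart, starting at `first`, that fall
    strictly before the first anniversary of `first`."""
    end = first.replace(year=first.year + 1)
    step = timedelta(weeks=weeks)
    result = []
    current = first
    while current < end:
        result.append(current)
        current += step
    return result


# Assumption: perfectly regular six-week spacing from the stated start date.
schedule = deadlines_within_one_year(date(2022, 4, 15))
print(len(schedule))
for deadline in schedule:
    print(deadline.isoformat())
```

With regular spacing the deadlines drift across the calendar from year to year, so any real schedule would likely adjust individual dates around holidays and conference timelines.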

Concerns and Challenges

ARR is a paper-centric reviewing service, independent of any conference. It is not our job to provide acceptance/rejection decisions. Many authors feel unsure of this new process and understandably so. AEs and reviewers are also new to this rolling process and are concerned about their ongoing loads. Conference chairs are unsure of the new process and opt for hybrid mode instead of supporting ARR fully, again understandably so. However, overall this means we are constantly addressing concerns from multiple interests, some in conflict with each other (for example, authors who want immediate, in-depth reviews and reviewers who want low loads and lots of time to review).

Moving to a new submission and review infrastructure (OpenReview) has proved challenging. The OpenReview team are very collegial and helpful, but the structure of ARR is unusual for OpenReview. OpenReview lacked many of the features and functionalities we needed to implement ARR, including some basic automations such as reminder emails and automatic checking of resubmissions. Consequently, our tech team has worked very hard to implement the various initiatives outlined above while we were rolling out ARR, including but not limited to AE assignment and reviewer assignment, and to provide consistent conflict-of-interest detection.

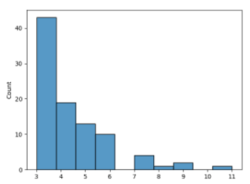

In order for peer review to work, everyone must participate. Because the *ACL community skews young (see stats above), we must train junior reviewers and continue to involve more senior people in the reviewing process. To the extent that people think they can submit many papers, insist on high-quality reviews, and yet provide no reviewing service themselves, any peer review system will fail. It is critical for experienced researchers to continue to participate in peer review. The chart below shows the distribution of the number of submissions by authors who are qualified to review for ARR (they have at least 5 papers in relevant fields, with the newest no more than 5 years old) and yet have provided 0 reviews to date (x axis: number of people; y axis: number of submissions).